Naturally I’ve been following all the “AI” developments lately, trying it out, and listening / thinking about its implications. If you already follow me, or saw the April Fools’ day “AI” we launched, you’ll have a pretty good idea of my general thoughts on it.

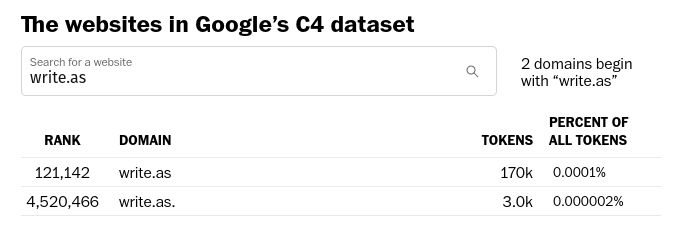

This recent Washington Post article brought the whole extraction side to light again, showing how these tools “train” on all the lovely language we publish on this writing platform of ours, among other places across the web. Here’s 170,000 “tokens” from Write.as making it into Google’s dataset alone:

Feedback wanted

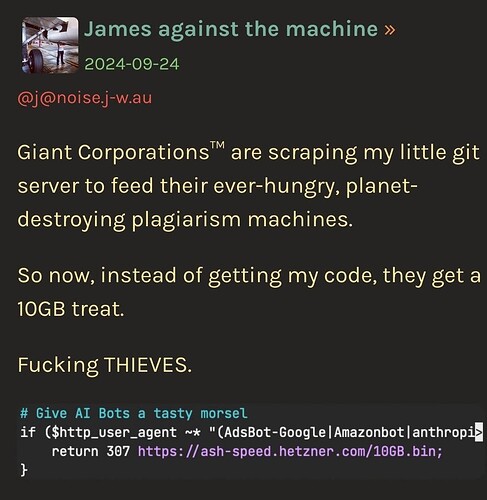

This begs the question: how do you feel about your written and visual content feeding these models / "AI"s? As a platform, should we do anything to proactively limit what these companies are building on top of your intellectual property, or does it not matter? (Despite any opinions I may have on the whole, any decision around this is totally up to the community – that’s why I’m opening up the discussion.)

Of course, this also applies across all the products in our suite. Large language models (LLMs) like ChatGPT crawl your Write.as posts as well as your social interactions on Remark.as and Writing Exchange. And image synthesis models like DALL-E and Midjourney could “train” on your Snap.as photos and visual creations. So I’d like to think about how this affects all our tools, and if our approach might differ between them or not.

Please feel free to discuss and let me know what you think!